Artificial intelligence is changing cybersecurity, but not always for the better. Alongside powerful defensive tools, AI is driving a new wave of cybercrime. One of the most visible and alarming examples is deepfakes: AI-generated videos, voice recordings, and images that imitate real people with unsettling accuracy. Once a novelty, deepfakes are now a staple of fraud, scams, and disinformation.

This article explains how deepfakes and AI are changing the face of cybercrime in 2025, the dangers for both organizations and individuals, and the steps that can be taken to counter the threat.

What Exactly Are Deepfakes?

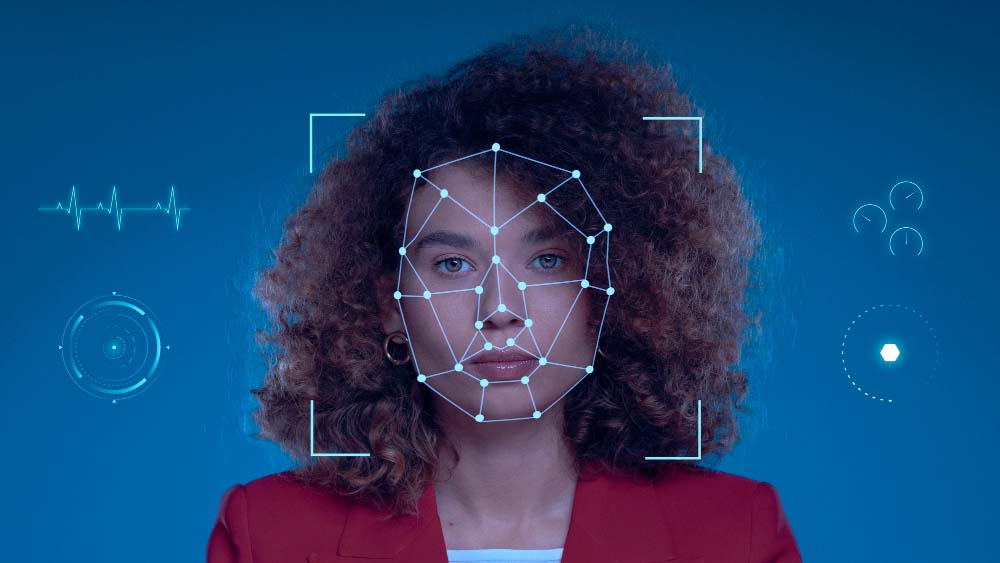

Deepfakes are synthetic media created with AI techniques such as deep learning and generative adversarial networks (GANs). They can convincingly reproduce a person’s appearance, voice, and mannerisms, which makes it difficult for viewers to tell fake from authentic.

- Video deepfakes: Face swaps or entirely fabricated videos of people speaking or performing actions.
- Voice deepfakes: AI-trained voice models that replicate a person’s speech patterns.
- Image manipulation: Images created or altered with AI to impersonate or embarrass someone.

Although there are legitimate applications (film, entertainment, accessibility, and so on), the same technology is used by criminals.

How Cybercriminals Are Exploiting Deepfakes

1. Business Email Compromise 2.0

Traditional email scams are now amplified by AI. Imagine an executive “appearing” on a video call or phoning staff to instruct them to approve a wire transfer. Employees are far more likely to comply when they can see or hear what appears to be their boss.

2. Scams and fraud in the financial sector

Attackers use deepfakes to pose as family members, government officials, or bank executives. A voice message from a “daughter stuck abroad” or an online message from a “loan officer” can create urgency and trust.

3. Social engineering at scale

AI can help attackers automate social engineering campaigns. Instead of sending generic phishing messages, criminals can craft unique, highly persuasive content aimed at specific targets, including video or voice follow-ups.

4. Reputational and political manipulation

Fake speeches, interviews, and “leaked” videos can damage reputations or sway public opinion. In 2025, the line between cybercrime and misinformation is becoming blurred. Deepfakes are used for financial gain as well as for ideological influence.

5. Identity theft and account takeovers

Biometric authentication is becoming increasingly common. Fake video or audio can be used to bypass systems that rely on voice or facial recognition, which opens up a new attack surface for criminals.

Why Deepfakes Are So Dangerous

- They exploit trust. Humans naturally trust faces and voices far more than written words.
- They are cheap to scale. Once trained, AI models can produce unlimited content.
- They erode trust. Even legitimate media gets questioned (the “liar’s dividend”), which undermines confidence in communications overall.
- They combine with other attacks. Deepfakes aren’t standalone; they enhance ransomware, phishing, and social engineering.

Real-World Examples (High-Level)

- Voice-cloning fraud: Banks have disclosed cases in which fraudsters used AI to impersonate a customer’s voice and approve fund transfers.
- Fake executive calls: Companies have lost millions of dollars after employees followed instructions delivered through convincing fake video or audio.
- Political disinformation: Fake videos of candidates have circulated ahead of elections, prompting platforms and governments to step up verification efforts.

These aren’t isolated events. They’re part of a growing trend.

Defensive Strategies Against Deepfake-Enabled Crime

For Individuals

- Be skeptical of the media you consume: Verify unusual requests even when they seem authentic.
- Use a secondary verification method: If a family member, employee, or boss asks for urgent help over video or a call, confirm the request through another channel.
- Strengthen account security: Use strong authentication, such as MFA or a security key, rather than relying on biometrics.
- Stay up to date: Follow reputable sources that warn of emerging scams.

For Businesses

- Create verification procedures: Require multi-person approval for financial transfers. A single voice or video request should never suffice.
- Awareness training: Teach staff how deepfakes can be used in social engineering.
- Use detection tools: Deploy AI-driven deepfake detection and anomaly monitoring.
- Secure communications: Encourage encrypted, authenticated communication channels.
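
The multi-person approval rule above can be illustrated with a short sketch. This is only one way to model it, under stated assumptions: the `TransferRequest` class, its fields, and the `REQUIRED_APPROVALS` threshold are hypothetical names invented for this example, not part of any real banking or payment API.

```python
from dataclasses import dataclass, field

# Hypothetical policy value: how many distinct approvers a transfer needs.
REQUIRED_APPROVALS = 2


@dataclass
class TransferRequest:
    """A pending wire transfer (illustrative fields, not a real banking API)."""
    requester: str
    amount: float
    approvers: set = field(default_factory=set)

    def approve(self, employee: str) -> None:
        # The requester cannot approve their own request, so a single
        # impersonated voice or video call can never release funds alone.
        if employee == self.requester:
            raise ValueError("requester cannot approve their own transfer")
        self.approvers.add(employee)

    def is_releasable(self) -> bool:
        # A set ignores repeat approvals from the same person.
        return len(self.approvers) >= REQUIRED_APPROVALS


request = TransferRequest(requester="ceo", amount=250_000.0)
request.approve("finance_lead")
print(request.is_releasable())  # False: only one approver so far
request.approve("controller")
print(request.is_releasable())  # True: two distinct approvers
```

The point of the design is that a convincing deepfake of one person is not enough: an attacker would have to deceive several people independently before any money moves.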

For Policymakers & Platforms

- Regulation of misuse: Stronger legal frameworks to penalize the creation and distribution of malicious deepfakes.
- Standards for content authentication: Watermarking or provenance signals to authenticate digital content.
- International collaboration: Deepfake crimes often cross borders and require global cooperation.
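
To make the idea of a provenance signal more concrete, here is a minimal sketch using Python’s standard `hmac` and `hashlib` modules. It is a simplification: the `sign_media` and `verify_media` helpers and the `SIGNING_KEY` value are assumptions made for this example, and production provenance schemes typically rely on public-key signatures embedded in the media’s metadata rather than a shared secret.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real publisher would protect a private signing key.
SIGNING_KEY = b"example-publisher-key"


def sign_media(media_bytes: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex tag binding the publisher's key to the exact media bytes."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Check that the media still matches the tag issued at publication."""
    expected = sign_media(media_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


original = b"frame data of an authentic video"
tag = sign_media(original)
print(verify_media(original, tag))                 # True
print(verify_media(original + b" tampered", tag))  # False
```

Any change to the media bytes invalidates the tag, so a viewer or platform holding the key can tell whether content is the same file the publisher released. It does not detect a deepfake as such; it only confirms that signed content has not been altered.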

The Cat-and-Mouse Reality

Deepfakes aren’t solely a technology issue; they’re a security issue. As the tools for creating fake media get easier and cheaper, security experts have to adapt faster. Meanwhile, attackers will keep combining deepfakes with ransomware, phishing, and fraud.

In other words:

- Attackers innovate to undermine trust.
- Defenders need to innovate to restore trust.

How to Stay Ahead

- Assume “seeing isn’t believing” in digital spaces.
- Implement zero-trust communication policies in the business environment.
- Support AI research and the development of tools that detect manipulation.
- Practice cybersecurity basics: use MFA and strong passwords, and be skeptical of urgent requests.

Closing Thoughts

AI and deepfakes are changing the cybercrime playbook. Traditional advice such as “don’t click on suspicious links” remains relevant, but it’s no longer enough. The current reality is that criminals can look and sound exactly like anyone, including your boss, your child, or even your president.

A good defense doesn’t require fear; it requires planning. By combining technological defenses, organizational policies, and general awareness, we can ensure deepfakes remain a manageable problem rather than a major catastrophe.